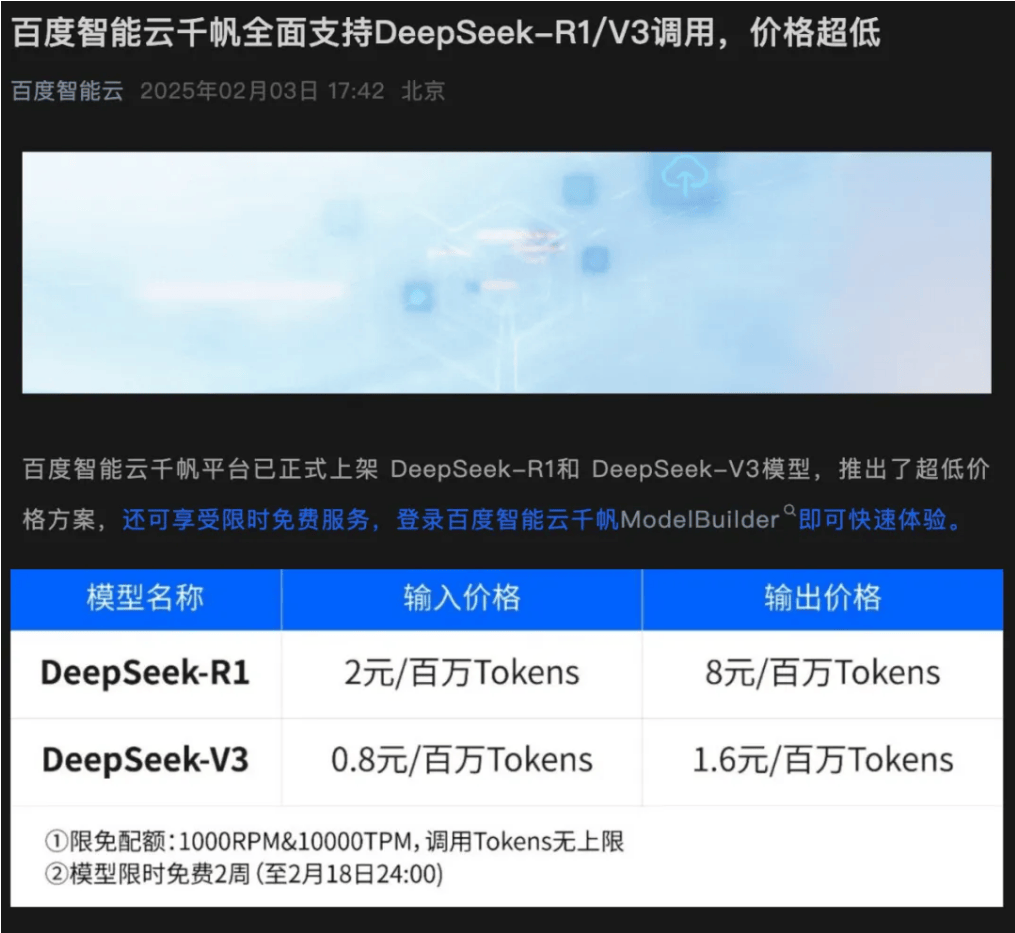

On the evening of February 3, Baidu Intelligent Cloud announced that its Qianfan platform has officially listed the DeepSeek-R1 and DeepSeek-V3 models. Alongside the launch, it introduced an ultra-low-price plan and a limited-time free service. Users can try the models right away by logging in to Baidu Intelligent Cloud Qianfan ModelBuilder.

According to Baidu Intelligent Cloud, the models are fully integrated into the Qianfan inference pipeline and into Baidu's proprietary content-security operators. This provides model security hardening and enterprise-grade high availability, along with full support for BLS log analysis and BCM alerting, so users can build intelligent applications securely and reliably. The models are free for two weeks, with the offer ending at 24:00 on February 18. The free quota is 1000 RPM (requests per minute) and 10000 TPM (tokens per minute), with no cap on the total number of tokens called.

To get started, users log in to the Baidu Intelligent Cloud website, click "Online Experience", complete real-name authentication, and can then call the DeepSeek-R1 and DeepSeek-V3 models from the "Model Square". The models were developed in-house by Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd. and perform strongly on math, code, natural-language reasoning, and other tasks.
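For developers planning calls against the free tier, the quota amounts to two independent per-minute caps: 1000 requests and 10000 tokens. Taking those figures at face value, the token cap is reached before the request cap whenever calls average more than 10 tokens each. The Python sketch below is a hypothetical client-side throttle, not part of any Baidu SDK. It assumes a sliding 60-second window and that each call's token count is known before sending; the platform's own accounting may work differently.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QuotaWindow:
    """Hypothetical client-side check against per-minute request and token caps.

    Defaults mirror the quoted free-tier figures (1000 RPM, 10000 TPM).
    The sliding 60-second window is an assumption, not Baidu's documented behavior.
    """
    max_requests: int = 1000   # requests per minute (RPM)
    max_tokens: int = 10000    # tokens per minute (TPM)
    window_s: float = 60.0
    # (timestamp, tokens) for each accepted call still inside the window
    _events: deque = field(default_factory=deque, init=False, repr=False)

    def _evict(self, now: float) -> None:
        # Drop calls that have aged out of the trailing window.
        while self._events and now - self._events[0][0] >= self.window_s:
            self._events.popleft()

    def try_acquire(self, tokens: int, now: float) -> bool:
        """Record a call of `tokens` tokens at time `now` if it fits both caps."""
        self._evict(now)
        if len(self._events) + 1 > self.max_requests:
            return False
        used_tokens = sum(t for _, t in self._events)
        if used_tokens + tokens > self.max_tokens:
            return False
        self._events.append((now, tokens))
        return True
```

For example, with the default caps a 6000-token call at t=0 is accepted, a 5000-token call at t=1 is rejected (11000 would exceed 10000 TPM), and the same 5000-token call succeeds again once the first call has left the window. Passing `now` explicitly keeps the check deterministic; a real client would pass `time.monotonic()`.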

Also on February 3, AliCloud announced that AliCloud PAI Model Gallery supports one-click cloud deployment of DeepSeek-V3 and DeepSeek-R1. On this platform, users can complete the entire workflow from training to deployment to inference with zero code, which simplifies model development and gives developers and enterprise users a faster, more efficient, and more convenient AI development and application experience.

Since DeepSeek went live in December 2024 and open-sourced DeepSeek V3, R1, and Janus Pro, a number of platforms, including Huawei Cloud, Tencent Cloud, 360 Digital Security, and Cloud Axis Technology ZStack, have recently announced that they are bringing DeepSeek's large models online.

On February 1, according to Huawei Cloud's official WeChat account, the open-sourcing of DeepSeek-R1 drew attention from users and developers worldwide. After several days of intensive work by the SiliconFlow and Huawei Cloud teams, the two companies jointly launched a DeepSeek R1/V3 inference service built on Huawei Cloud's Ascend Cloud service. Thanks to their self-developed inference acceleration engine, the DeepSeek models deployed by SiliconFlow on Huawei Cloud's Ascend Cloud service deliver results on par with deployments on the world's high-end GPUs.

On February 2, Tencent Cloud announced that the DeepSeek-R1 large model can be deployed to Tencent Cloud “HAI” with a single click, letting developers start calling it in as little as 3 minutes. In short, “HAI” spares developers the tedious steps of buying GPUs, installing drivers, configuring networking and storage, setting up the environment, installing frameworks, and downloading models, so they can call DeepSeek-R1 in just two steps.

On February 2, 360 Digital Security Group announced that its security large model now integrates DeepSeek. Using DeepSeek as the base for its security model and drawing on 360's strengths in security big data, the company will train a “DeepSeek edition” of the security model through continued reinforcement learning and other techniques, with the goal of making security truly “autopilot”.

In addition, on January 31, three American technology giants, NVIDIA, Amazon, and Microsoft, each announced on the same day that they were offering access to DeepSeek-R1, an advanced large language model developed by a Chinese company.

NVIDIA announced that the DeepSeek-R1 model was already available on NVIDIA NIM. Amazon said that DeepSeek-R1 can be used on Amazon Web Services. Microsoft announced that DeepSeek-R1 had been officially added to Azure AI Foundry, its enterprise-grade AI services platform.

DeepSeek-R1 is widely regarded as one of the most advanced large language models available, offering high-quality language processing.